In this interview, we had the pleasure of sitting down with NOVO Energy to delve into their experience with ClickHouse and ClickHouse Cloud on AWS. We discussed the challenges they faced before discovering ClickHouse, the impact it has had on their data analytics, and how it has transformed the way their team operates. Their insights offer a firsthand look at how ClickHouse’s speed, scalability, and flexibility drive innovation and efficiency in their organization. Enjoy this deep dive into their journey with ClickHouse, and perhaps find inspiration for your own data challenges.

Do you mind introducing yourself?

My name is Martin Hardselius and I’ve been a software engineer for a little more than 12 years. During my career, I’ve worked in several different companies and industries, including online identity, gambling, telco, consumer electronics, big companies, small startups, etc. I started out as a backend engineer, expanded into infrastructure and site reliability, and now I find myself in the domain of battery research and industrial IoT, which could be viewed as a souped-up version of home automation, doing work across the entire stack.

I joined NOVO Energy on May 2nd, 2022, as one the very first employees. That is kind of funny considering the only person who knew anything about making batteries in the company at that time was our CTO, Eerik Hantsoo. But he had a vision of how a robust data platform would play a central role in enabling good research, which is why he brought me on before anyone who actually knew anything about batteries. Now, more than two years in, my knowledge of the domain has grown, and I have the privilege of working with some very smart people, solving interesting problems using exciting technology.

What is the origin story of the company or the team within the company? What problem are you solving?

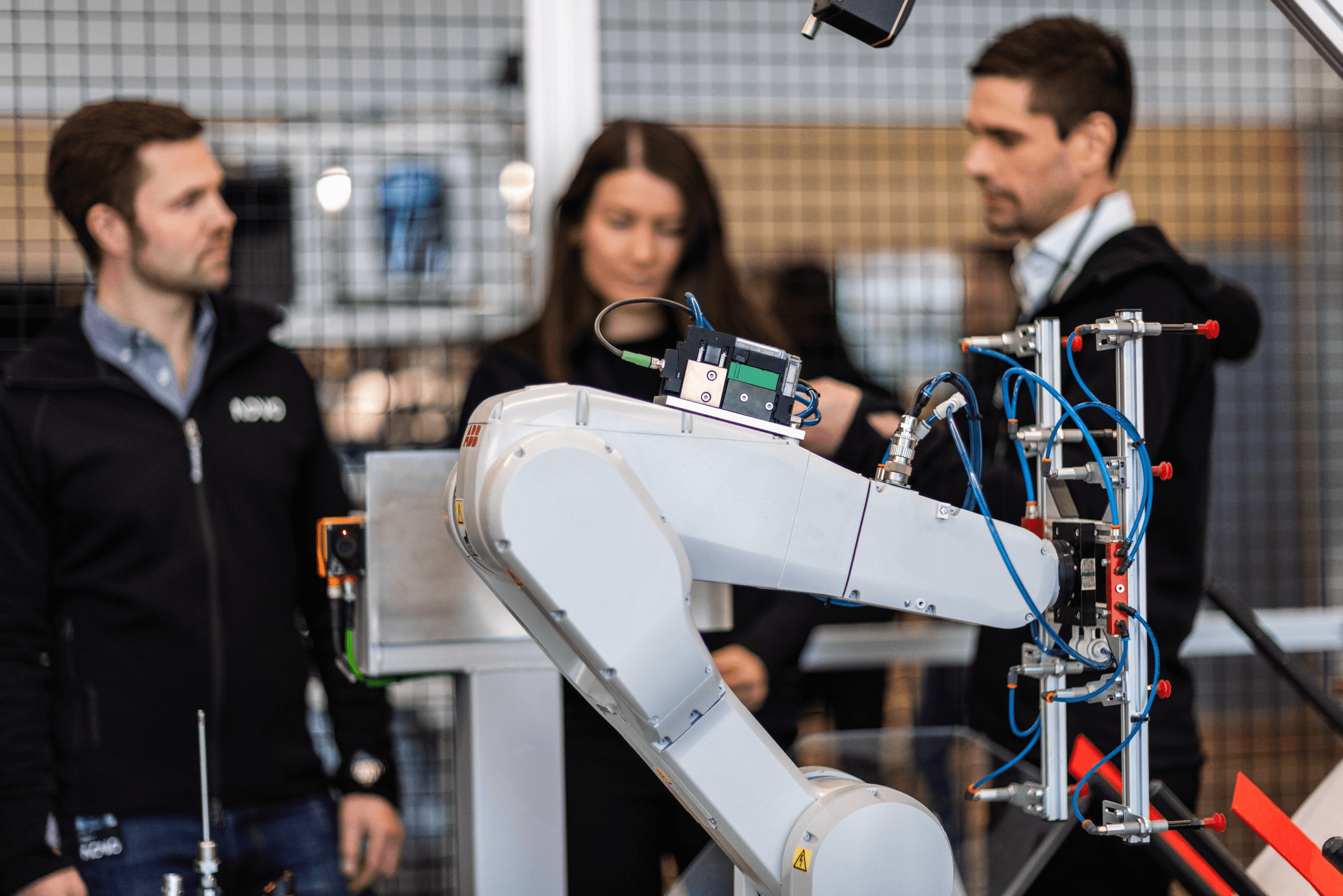

NOVO Energy is a joint venture between Northvolt and Volvo Cars. Our mission is to develop and produce sustainable batteries, tailored to power the next generation of pure electric Volvo cars. In our R&D facility, we collect large amounts of data from equipment, research instruments, and assembly machinery. This data feeds into the analysis of the cells we produce.

What is most important when you tell your, or your team, story?

The purpose of the software engineering team within NOVO Energy R&D is to internally democratize access to research data.

The data we collect typically ends up in whatever storage our vendors have picked to back their software. We’re writing software that copies that data into our own database, where we are then free to query and analyze using tools that are available to everyone in our organization. We centralize our data so that we can streamline our tooling. We standardize our data to reduce the risk of errors in our calculations. We democratize access to our data to remove unnecessary gatekeeping. We generalize our representations so that data can be compared across different experiments, regardless of which test equipment was used.

What requirements did you have for the database/store component(s) in your architecture?

Our primary business requirement for any database/store was that we own and control the data we store — no vendor lock-in and no walled gardens.

Luckily, that’s not the most demanding requirement to fulfill. Regarding performance, we were looking for a solution that allowed us to query for a few million rows out of a dataset of billions of rows in a reasonable time without spending too much time tuning it. Additionally, we wanted a low-maintenance or managed solution where basic hygiene like backups was part of the package, that’s easy to integrate with other tools, such as Grafana or BI solutions, a familiar query language, and good language support for at least Rust and Python.

Tell us about your discovery of ClickHouse. How did you hear about it, and what made you excited to give it a try? What were your hesitations?

We stumbled upon ClickHouse’s booth at AWS Summit Stockholm in 2023. One of the team members had seen the name before on Hacker News so we decided to approach. After a brief discussion with the two gentlemen at the booth, we realized that ClickHouse was highly relevant to our use case.

How did you evaluate Clickhouse? How did ClickHouse perform against the alternatives considered?

Our first evaluation of ClickHouse consisted of taking it on a spin on one of our laptops, seeding it with our current data and then playing around with queries. Already at this stage, we were happy with the performance of ClickHouse because we were seeing 10-100x performance gains at this stage. From there on we decided to try out ClickHouse Cloud free tier and after further evaluation we quickly upgraded to a paid tier.

What alternative databases did you consider?

Prior to ClickHouse we were using an on-premise installation of TimescaleDB, which served us well, but we had not spent any time on right-sizing the hardware or tuning the database. When considering alternatives to that, we were mainly looking at managed offerings that would fit our architecture. Using AWS RDS to back our analytical queries was not something we wanted to do and we also considered a solution involving AWS S3, AWS Glue and Amazon Redshift, but we felt that it was a bit over engineered for our scale of operation.

What were the considerations when weighing the use of cloud vs self-managed ClickHouse?

As previously stated, and since we’re a small team with limited time, we were looking for a low-maintenance or managed solution. Going with ClickHouse Cloud on AWS was an easy decision because it allows us to focus on our primary work without having to worry about maintenance, upgrades, backups, and so on.

Can you share some quantitative metrics about ClickHouse’s performance (e.g., ingest/query latency/overall data volumes/cost efficiency)?

At the time of writing, we’re sitting on just above 400M rows of electrochemical test data. We don’t think this is a lot considering we’re still in a ramp-up phase. As we commission new test equipment and push our tester utilization rate up, this number will greatly grow. When we’re fully ramped up we might have around 1300 battery cells on test at any given time. The equipment will likely generate new data at roughly 0.1 Hz. With some auxiliary sensors, sampled at the same frequency, associated with a good portion of the battery cells we’re probably looking at around 2000 new records to be inserted every 10 seconds. On top of that, we’ll be gathering telemetry data from our process equipment and the BMS (Building Management System). That may be another 200 records at 0.1 Hz. We’re batching our inserts to happen about once per minute, so now we’re looking at 12-13K records per minute. That’s not a lot of data, but insert performance was never our highest priority when picking a database. What’s more critical is query performance. For every test we’re processing, we constantly check the latest record we’ve already processed. This will tell us when to request new data from our battery cyclers. This query looks something like this

SELECT channel, test_id, max(sequence_number), max(observed_at)

FROM cycler_data GROUP BY test_id

With a growing number of tests and every test potentially having months’ worth of data, the performance of this query becomes increasingly important. At the moment, this query takes about 0.1 seconds with just over 4400 (and 4M rows) tests in the database. Without the projection, it takes more than 17 seconds.

Looking forward, what’s next for you (and your use of ClickHouse)?

At this stage, we have most of the plumbing for our data pipelines in place. We are experimenting with post-processing pipelines, where we push aggregate results from ClickHouse to either separate ClickHouse tables or PostgreSQL. Of course, we will continue to monitor our system’s performance. As the number of tests and test records grows over time, we will likely need to optimize different aspects of our setup.

Also worth noting is that we’re using ClickHouse to store logs from our services.

The amount of data is negligible compared to the lab telemetry we’re gathering, but that might grow if we decide to ingest logs from various equipment and machines too. We’re using a setup heavily inspired by (Uber) and it has served us well as a replacement for CloudWatch. Log analytics is something we haven’t explored much, but could potentially be attractive for monitoring our operations.

How would you describe ClickHouse in 3 words?

Simple, pleasant, performant.